A Robot That Runs Like a Cat

Thanks to its legs, whose design faithfully reproduces feline morphology, EPFL's four-legged "cheetah-cub robot" has the same advantages as its model: it is small, light and fast. Still in its experimental stage, the robot will serve as a platform for research in locomotion and biomechanics.


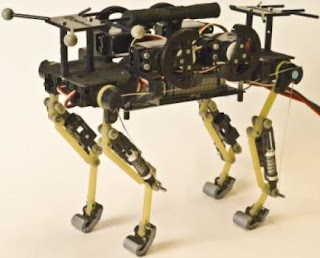

This is cheetah-cub, a compliant quadruped robot. (Credit: © EPFL)

The robot is the fastest in its category, measured by normalized speed, among small quadruped robots weighing under 30 kg. In tests, it ran at nearly seven times its body length per second. Although it is not as agile as a real cat, it has excellent self-stabilization when running at full speed or over a course with disturbances such as small steps. The robot is also extremely light, compact and robust, and it can be easily assembled from inexpensive, readily available materials.

Faithful reproduction

The machine's strengths all reside in the design of its legs. With this robot, the researchers developed a new leg model based on meticulous observation and faithful reproduction of the feline leg. The number of segments (three on each leg) and their proportions match those of a cat. Springs reproduce the tendons, and actuators, small motors that convert energy into movement, replace the muscles.

"This morphology gives the robot the mechanical properties from which cats benefit, that's to say a marked running ability and elasticity in the right spots, to ensure stability," explains Alexander Sprowitz, a Biorob scientist. "The robot is thus naturally more autonomous."

Sized for a search

According to Biorob director Auke Ijspeert, this invention is the logical follow-up to the lab's earlier locomotion research, which included a salamander robot and a lamprey robot. "It's still in the experimental stages, but the long-term goal of the cheetah-cub robot is to be able to develop fast, agile, ground-hugging machines for use in exploration, for example for search and rescue in natural disaster situations. Studying and using the principles of the animal kingdom to develop new solutions for use in robots is the essence of our research."
