Nude-Colored Hospital Gowns Could Help Doctors Better Detect Hard-to-See Symptoms


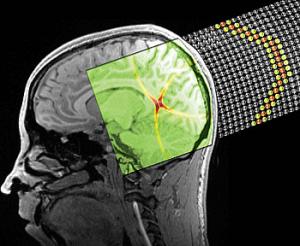

A new study from Rensselaer Professor Mark Changizi suggests that changing the color of hospital gowns and bed sheets to match a patient's skin color could greatly enhance the ability of a doctor or nurse to detect cyanosis and other health-related changes in skin color. Perceived skin color depends crucially on the background color. For example, the five small squares are identical in each of the boxes above. However, their changes and gradations are much easier to identify in the lower right-hand box than in the other boxes, which are colored like typical hospital gowns. (Credit: Mark Changizi / Rensselaer)

"If a doctor sees a patient, and then sees the patient again later, the doctor will have little or no idea whether the patient's skin has changed color," said neurobiologist and study leader Mark Changizi, assistant professor in the Department of Cognitive Science at Rensselaer. "Small shifts in skin color can have tremendous medical implications, and we have proposed a few simple tools -- skin-colored gowns, sheets, and adhesive tabs -- that could better arm physicians to make more accurate diagnoses."

Human eyes evolved to see in color largely for the purpose of detecting skin color changes, such as when other people blush, Changizi said. These emotive skin color changes are extremely apparent because humans are hard-wired to notice them, and because the surrounding skin color remains unchanged. The contrast against the nearby "baseline" skin color is what makes blushes so noticeable, he said.

Human skin also changes color as a result of hundreds of different medical conditions.

Pale skin, yellow skin, and cyanosis (a potentially serious bluish discoloration of the skin, lips, nails, and mucous membranes caused by a lack of oxygen in the blood) are common symptoms. These color changes often go unnoticed, however, because they usually involve a fairly uniform shift in skin color across the whole body, Changizi said. In most instances the observer simply assumes that the patient's current skin color is the baseline. The challenge is that there is no contrast against the baseline for the observer to pick up on, because the baseline itself has shifted.

(To hear Changizi address the age-old question of why human veins look blue, see: http://blogger.rpi.edu/approach/2010/04/26/so-why-do-our-veins-look-blue/)

One potential solution, Changizi said, is for hospitals to outfit patients with nude-colored gowns and sheets that closely match their skin tone. Another is to develop adhesive tabs in a large palette of skin tones. Physicians could choose the tabs that most closely resemble the patient's skin tone and place them at several spots on the patient's skin. Both techniques would give doctors and clinicians an easy, effective way to record a patient's skin tone and see whether it deviates, even very slightly, from its "baseline" color over time.

"If a patient's skin color shifts a small amount, the change will often be imperceptible to doctors and nurses," Changizi said. "If that patient is wearing a skin-colored gown or adhesive tab, however, and their skin uniformly changes slightly more blue, the initially 'invisible' gown or tab will appear bright and yellow to the observer."

While there are devices that specifically measure the oxygen content of blood to help detect the onset of cyanosis, Changizi said the color cues offered by color-matched adhesive tabs and hospital gowns would be another tool, one that alerts clinicians that blood oxygen content needs to be measured in the first place. The color-matched tabs and gowns would also benefit the many hospital departments, as well as hospitals in other countries, that lack equipment to measure blood oxygen content, he said.

Changizi's findings are detailed in the paper "Harnessing color vision for visual oximetry in central cyanosis," published in the journal Medical Hypotheses. The complete paper may be viewed online at Changizi's Web site at: http://www.changizi.com/colorclinical.pdf.

Last year, Changizi's eye-opening book, The Vision Revolution: How the Latest Research Overturns Everything We Thought We Knew About Human Vision, hit store shelves. Published by BenBella Books, The Vision Revolution investigates why vision has evolved as it has over millions of years, and challenges theories that have dominated the scientific literature for decades.
